In this mini-lesson, consider how you and others are using AI and reflect on those uses given what you have now learned!

Activity:

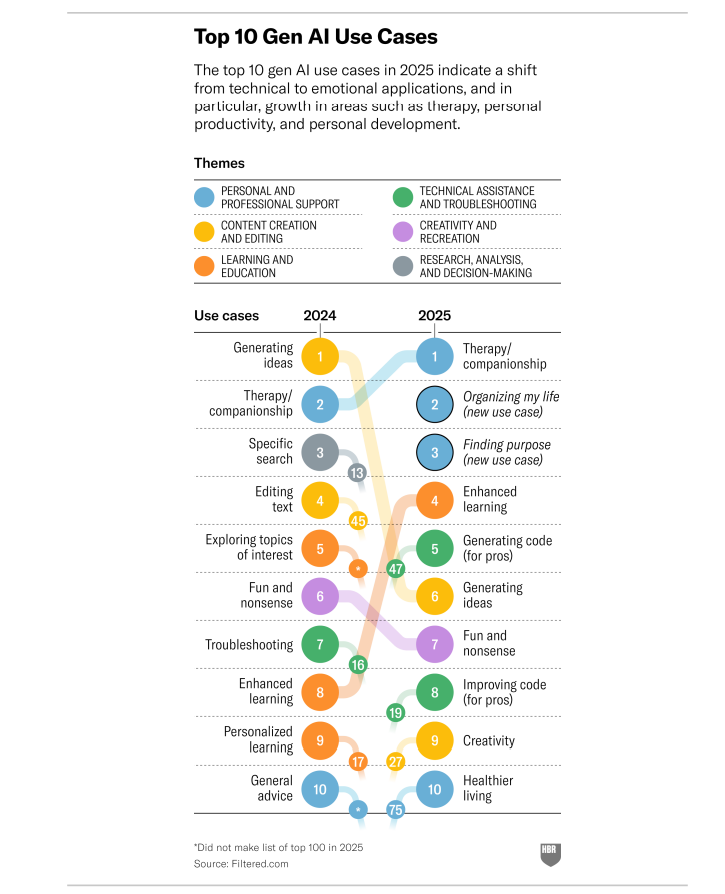

Look at the image above and read the Harvard Business Review article on uses of AI in 2025 (4 min.)

Zao-Sanders, M. (n.d.). How People are Really Using GenAI in 2025. Harvard Business Review. Retrieved July 25, 2025, from https://hbr.org/2025/04/how-people-are-really-using-gen-ai-in-2025

(Can’t access either link? Try this one, and if you’re really interested, optionally read the full report.)

Discuss:

If you like, tell us how you are using GenAI and how it compares to this list. What do you think about what other people are currently using AI for? Choose at least one of the uses listed above to reflect on. Given what you now know about AI from the previous things, what might be some of the benefits and pitfalls of this particular use? How can you encourage yourself and others to think critically about AI?

29 replies on “thing 13: How Are People Using AI Now?”

I don’t use GenAI for work and use it in a very limited capacity in my personal life. However, my husband, who is a songwriter and musician (mostly as a hobby), uses Mureka. He provides the lyrics he wrote and the guitar and piano music he recorded, and then uses Mureka to help arrange a final song. He selects the type of vocalist and other instrumentation available on Mureka. The benefit to him is that Mureka provides the elements of musical arrangement that he cannot do himself, sort of à la carte. It is also easy for him to use, since he has a musical background. Since he is not a vocalist, Mureka allows him to add his lyrics and original music to arrange a finished product. The pitfall is that the vocals and musical arrangements available in Mureka are not original creations made as you use the product to create a song. You would need to be a musician or understand music theory to get the best use out of Mureka. I believe staying informed about GenAI and its positive effects on the quality of our lives will help people overcome their fears. It is also imperative that information about AI’s shortcomings, such as its effects on our environment, continues to be made available.

I would say that I use AI primarily for “organizing my life” and “generating ideas,” which is very much in alignment with the list. One of the items on the list that caught my attention was “breaking the rules” (#92). When I went into the full report, there was only one quote attached to it and it was about using GenAI to generate code that bypassed a school internet block. I thought this was a little funny at first and entirely within the norm of K-12 students attempting to find loopholes for school rules. While funny, I do think it presents some risk, particularly in light of our previous content about data security and ethical concerns. There may be a legitimate security reason to block content on school devices/internet and students being able to easily bypass that through AI is a potential problem. There are certainly pros and cons and I think we can help mitigate potential risks with increased transparency, such as clearly laying out the reasons for prohibiting certain content on sites and being open to discussion.

I don’t use AI, but after reading through this article, I will definitely try it for creating a travel itinerary. Using it to enhance learning, like learning a new language, could be fun too!

It is funny that the author used it to dispute a fine and the fine was voided.

I use ChatGPT for a couple of the top use cases, but “organizing my life” would be my most frequent of those top 10. It helps me decode hospital charges, plan meals, and track my budget, and it acts as an assistant that can take ideas and extrapolate them into a polished email. This seemed inevitable, since our productivity is expected to increase continually while wages have significantly less purchasing power than they did before computers and higher productivity expectations came along. When “other duties as assigned” becomes more like 50%+ of your job rather than the supposed 5%, it is impossible to maintain the quality of your work without assistance. I think if this tool existed but workloads and the cost of living relative to wages were on par with what they were roughly 60 years ago, we wouldn’t see such a need for it at work. It makes perfect sense that people are using this tool for so many different things. It tells a story about what people are not getting in their lives, or what they don’t have time to invest deep thought or planning in. I think the *need* for this tool is what we should really be concerned with.

On this list, healthy living is one I would be cautious about. I have used AI for meal planning, but it does not know everything about your body and won’t completely replace a doctor or therapist. While it is definitely a helpful tool, people should be mindful of its limitations when it suggests food or exercises. People using it for this purpose will need to give it details about any health concerns and, potentially, medications they are taking (e.g., no grapefruit if it interacts with the medications you are on).

I recommend that people understand AI can be very helpful when used in a mindful manner, but it is more of a starting point than an end product.

I have used NotebookLM at work and have uploaded documents, slide decks, and presentation transcripts. I then asked it to create a podcast and produce an overview of the conference. I checked the output to be sure it was accurate. NotebookLM in that instance was spot on.

Personally, I have used AI to learn about what to plant in my garden, create new recipes, and draft an email to a company that was refusing to give me a refund when they advertised 100% guaranteed satisfaction on their website.

I can understand why the number one use case for AI in 2025 is therapy/companionship. AI is always available and has no judgement. While this might be helpful for some, I think others (particularly those who may suffer from mental illness) should be careful. We must remember that AI is not human and has no empathy and no feelings. While it can often be quite helpful, it’s possible for some people to fall into an unhealthy pattern with AI that is quite difficult to pull themselves out of.

I personally don’t use AI that much beyond entertainment (and passively through Grammarly), but the Harvard Business Review article gave me an idea: I asked Copilot to create a to-do list for me before my friend comes to visit in two weeks! I asked it to exclude the weekend because I’ll be out of town, and it did. It also included optional weekend tasks that I could do. A benefit is that I don’t have to sit down, think through every little detail, and come up with a timeline from scratch. A pitfall could be that it doesn’t know all the ins and outs of your home and what needs to be done, so you’ll still need to add to or modify the list as needed.

So far, I primarily use AI to generate ideas useful to me at work. I find it to be a useful brainstorming tool (think ChatGPT and Claude). I would like to expand my usage to support research (I’m thinking Elicit or Semantic Scholar). Of this list, the item I had not thought about is using AI to organize my life. From the full report, this means “. . . structuring tasks, priorities, and goals and even sorting out your physical environment. Generative AI can assist by offering personalized scheduling, task management, and goal-setting to help people make best use of their time.” The biggest question for me with this use is how to communicate priorities to the tool, and what its default priorities are. I can imagine that it might be useful in developing SMART goals, but can it help the user imagine?

I have started using AI in both professional and personal settings. It has helped me with drafting emails, summaries, and checklists at work, as well as with meal planning and shopping lists, vacation planning, and itineraries for visits. I even used it to put together an exercise plan and decipher a medical results document. I usually double-check the results and make sure the information makes sense. I am not sure I would use it for therapy or companionship, especially after learning more about AI in this course. But I can see it being helpful in the future with organizing my home, planning a garden in the spring, etc. So many possibilities I want to explore.

When I do use generative AI I mostly use it for troubleshooting, improving code, general advice, and enhanced learning. When I am writing scripts there may be a function or module that does what I am trying to do faster or better that I am just not aware of.

From the list I see “Healthier living” has moved from number 75 to number 10 which may be a good thing. Many people seem to not know basic things such as what to eat, how much to eat, and various things about exercising and generating a meal plan or exercise plan may help. I also believe it is able to generate plans that work around simple injuries.

One pitfall in the healthy living scenario is trying to replace actual medical practitioners and people using it to self-diagnose and self-medicate. Artificial intelligence does not know someone’s entire medical history, what medication they are currently taking, or their family history unless they give it that information. I have seen a few articles where users were told to use harmful substances because they couldn’t take the normal medicine. Also, general misinformation may not be taken into account when collecting and processing data.

I don’t use GenAI personally or professionally, but the article was super interesting for me in thinking about the dominant uses. I have a friend who uses ChatGPT for companionship, talking with it while driving and engaging with it like a friend. I play Dungeons and Dragons, number 31 on the list, and my DM uses GenAI to generate location and character descriptions. They are often strange, but this makes our gameplay more fun.

I remember going to a science museum as a kid and interacting with a computer therapist. You would type a sentence like “I like clouds” and the computer would respond “Why do you like clouds?” You would say something like “because they are beautiful” and the machine would say “Why are they beautiful?” and so on. I remember the exhibit being about the real benefits of self-reflection in this manner: even though you know it’s not a real person asking you questions, you gain ways to reflect that are measurably beneficial. It’s interesting to consider the consequences of ChatGPT as a therapeutic companion.

Looked it up: ELIZA

https://en.wikipedia.org/wiki/ELIZA

Because my work as a researcher involves a lot of data, I use ChatGPT almost every day. It’s incredibly helpful: I ask it to troubleshoot errors in my code, explain complicated academic terms, and summarize long journal articles.

I use GenAI in two areas of my life. The first is proofreading and help with writing business emails, reports, and briefs on topics for meetings and workgroups. It saves time and expands my communication skills. The second is learning to code and troubleshooting errors in my code. I prompt it to explain why the code I wrote is problematic, and it provides ways to fix it. It’s a great feedback tool for learning.

I’m really disturbed by the 1st place position of therapy/companionship in that list, especially in light of the growing number of suicides caused by precisely that type of use. See for instance https://www.readtpa.com/p/chatgpt-will-watch-you-die-when-deeply

I mostly use it for personal use/knowledge. For instance, rather than paying for an app to help me identify plants, I take a picture and input it into ChatGPT. Given all that I know about AI now, especially the environmental issues, I’m not sure if I’ll be using it that often.

I use AI to write emails, organize to-do lists, help me take notes, and create quizzes. It does everything I am not great at, or that takes a lot of time I would rather spend on other things like focused learning. I also use it to write code for my personal projects and to help me learn through trial and error.

I found this a very interesting article. I have primarily used AI to help generate ideas for writing when I am stuck. This course has given me plenty of ideas for other ways to use AI, especially in my personal life. We are planning a trip to CO this month, and I am going to ask it for itinerary ideas.

So far I have not been an active AI user, although I am exploring its abilities. The one thing I definitely want to use is its ability to create code. I need to learn Python, and since I know general programming but not that specific language, I feel AI assistance will be very useful and will save me time.

I typically use AI for work, such as idea generation or proofreading different passages. I think it is great for making work more efficient, but I can see some concern about using it for too much personal information, whether that means AI gathering personal information or people limiting personal interactions even more than we already do with social media.

I mostly use GenAI for personal and professional support, especially with writing emails, and I’ve noticed a lot of people around me use it in similar ways, as well as for coding or automating tasks like math. Over time, though, I’ve realized I’m becoming more dependent on ChatGPT for even small things, and it’s started to make me feel less confident in my own abilities. On one hand, AI saves time and makes communication smoother, but on the other, it can create a crutch where I default to it instead of practicing my own skills. A benefit of this use is efficiency, but the pitfall is losing trust in my own voice. To think critically about AI, I try to remember it’s a tool meant to support me, not replace me, and I can build confidence by drafting things myself first and using AI more as an editor than a writer.

I don’t use AI, but I occasionally see posts on social media where someone has asked AI to get them the cheapest plane tickets somewhere. When I read them, I am impressed and a little jealous that I haven’t gotten such an inexpensive flight anywhere. I also think, ‘Why didn’t this person go through all those machinations themselves to get the cheapest flight?’ But I realize that all the steps required to make something happen in our lives now (find the website, log on, create a new password, search, search again, and again and again, repeat ad nauseam) are tedious and time-consuming. Wouldn’t we all like to outsource these tasks to a private secretary or concierge? Is that what AI is providing us? Along with asking AI for therapy, this all seems to speak to loneliness. How do we connect with other human beings when it’s so easy to just ask AI?

I use GenAI mostly for organizing my life and learning. I see others use it for companionship, which makes me cautious. I think the benefit is accessibility, but the pitfall is replacing real human relationships with synthetic ones.

At work, I use generative AI for cleaning up text or helping me answer something that was hard to put into words. I’ve also asked it to analyze a conversation to see if my perception was valid (never using real people’s names). I’ve also used it to create formulas in Excel, or scripts for certain creative software. Looking at this chart, it seems I’m a bit stuck in last year’s trends (minus the scripts/code part). I have a hard time imagining that AI can be a companion, but I can see the potential therapeutic side to it. For example, with the conversation I had it analyze, I wanted to see what it thought of the tone of a message (without giving it my thoughts), and it reached the same conclusion I did, which was validating.

I’ve used AI to make some manual Excel processes ever so slightly faster at work (I don’t feel like it’s really saved time yet). In my personal life, I use it to look up questions I would otherwise Google, or for personal organization, but again, I don’t think it has decreased my mental load as I hoped it would. I think both fall into “personal and professional support” uses. I would be very wary of using it as emotional support, as it learns and reflects back the answer that you want; you could end up stuck in an unhealthy pattern created by you and the AI. I think a great way to think critically about AI is to stop anthropomorphizing it, as we discussed in previous things.

I’ve been using AI primarily as an enhanced spellcheck, a code debugger, and an organizational tool. The concept of using it for emotional availability, therapy, or some kind of personal support is mind-boggling. Sometimes I do anthropomorphize the model and say please and thank you when doing research or a specific search, but that’s just a good habit. AI’s capacity to be harmful in emotional settings is astonishing, and it should be avoided at all times if you have the inclination to speak to it as a person.

I would say I mostly use Gen AI for generating ideas. I find it really helpful as I can take initial ideas and flesh them out further before starting to write about them. I think the biggest risk is losing the ability to think creatively but I do think that it takes creativity in the prompt, and often my prompts are longer than the responses I am generating, so it takes a lot of thinking on my end.

I am learning to use AI as a helper in searching (mathematical) literature for results related to my research, and to try to prove new results or at least get some hints from AI’s replies. So far, the former has been much more successful than the latter. Having read the article, I was somewhat surprised that many people are using AI as a therapist, or even as a surrogate friend/companion. One of Asimov’s short stories came to mind. Here is a link (also found with the help of AI): https://www.google.com/search?q=asimov+multivac+getting+tired+of+life&gs_lcrp=EgZjaHJvbWUqCQgCECEYChigATIGCAAQRRg5MgkIARAhGAoYoAEyCQgCECEYChigATIJCAMQIRgKGKABMgkIBBAhGAoYqwLSAQkxODkxMWowajSoAgOwAgHxBTxxku5tYVBo8QU8cZLubWFQaA&sourceid=chrome&ie=UTF-8&udm=50&fbs=AIIjpHxU7SXXniUZfeShr2fp4giZ1Y6MJ25_tmWITc7uy4KIeoJTKjrFjVxydQWqI2NcOha3O1YqG67F0QIhAOFN_ob1yXos5K_Qo9Tq-0cVPzex8YVosMX4HbDUrR7LivhWnk0FuAZVXvp62Oi2LViZ2OMmfytS7nNJKwjX-dfWDM13u79Ad-ozOsBhPchmVRVEnbtWFJzd1HixniG-pG3cQciEsrkJqA&ved=2ahUKEwi-op7q3pSQAxXYF1kFHTCQDlYQ0NsOegQIAxAB&aep=10&ntc=1&mtid=qmvmaNOULfiv5NoPw7fA4AY&mstk=AUtExfDBTIH6ybQg7B1sMAEtJx-DG_eECB7cz3BKmcOHUb8tkUvQLx331DvCZ0lxnLBxLjWzyBbpVg0ZviPBpO5n1hB5DugrAIBdB41IO8ACXSnQN1tDunwOI4XWbkNNiicyxwu978eWGPX0nHVzPBqTMgtYOql08jP_-e7t99E-1QeepxC4vKw3fdFCefwuWfX1jFN_4nkTlkea66nf4kuZrGSYxcKvWaY6uBcVnTxCdCE2ABQhNj722CGwxblzexNniRkKmlmwFKBz_xeGBfxpKIIkTkoTjBqGyrR1E4B5DIYmOR3qUgnJi7N4F05Gs7xHBq7aTVLzrOL-rg&csuir=1

I use AI mostly for creativity, to brainstorm different witty things such as trivia team names 🙂 The personal support aspect seems kind of concerning. While on the one hand it offers the knowledge-based benefits that a real person would, it lacks the emotional human connection that I think is necessary to overcome personal struggles. I would just encourage people to think about the long-term impacts of such uses. While the immediate uses are helpful, some may have long-term consequences.

My AI use has been mostly incidental, such as when doing a web search and the AI pops up at the top or using more of the predictive text when replying to an email with a fairly straightforward answer.

I think it’s important to check very carefully for hallucinations and other ways it might be confused. If I’m looking for something more obscure or important, I want to look at the source rather than read the AI summary, whereas if it’s not really important (an actor in a show or the name of a song), the AI result is often good enough. I personally find it easier to just write something myself most of the time, rather than try to proofread something generated by AI.

I have used AI to help me make lists, generate ideas, and make fun graphics. Looking at the list, I find it very interesting that using AI for Companionship/Therapy is now first on the list. I feel this says a lot about the need for mental health and the epidemic of loneliness. People are looking for help, but don’t want to be judged by others. However, I feel that using AI in this way may have negative consequences down the road. What if the advice is wrong or hurtful? I think giving general advice/companionship can be ok initially, as long as AI suggests ways to connect with humans who can offer support.